How Graphics Cards Affect Power Consumption and Energy Bills

7 August 2025

Ever looked at your electricity bill and thought, “Hold on… why is this suddenly higher than usual?” If you're a PC gamer, a 3D artist, or someone crunching data for hours, the culprit might be hiding inside your rig: your graphics card.

Yeah, that shiny GPU sitting proudly in your system? It's not just turning polygons into jaw-dropping visuals—it's also sipping (or gulping!) down a surprising amount of power. And if you're not paying attention, it's sneakily bumping up those monthly energy costs. Let's pull back the curtain and unpack how graphics cards really affect power consumption and your energy bill.

What’s the Big Deal with Graphics Cards Anyway?

First off, let’s get something straight. Graphics cards are powerful beasts. Compared to your CPU or hard drive, GPUs crank out raw computing performance like a sports car tearing down the freeway. But just like sports cars need more fuel, graphics cards need more juice. And that juice? It’s coming straight from your wall outlet, which ultimately shows up on your utility bill.

So, yeah, they matter. A lot.

Breaking Down GPU Power Consumption

Not all GPUs are created equal. Some sip power like they're nursing a latte, others chug electricity like it's a bottomless energy drink.

Here’s a quick breakdown of where that power goes:

- Core Performance: The GPU core is the brain of the graphics card. The faster it runs, the more power it demands.

- VRAM (Video RAM): High-performance GPUs come with faster, larger VRAM — and that adds to the power draw.

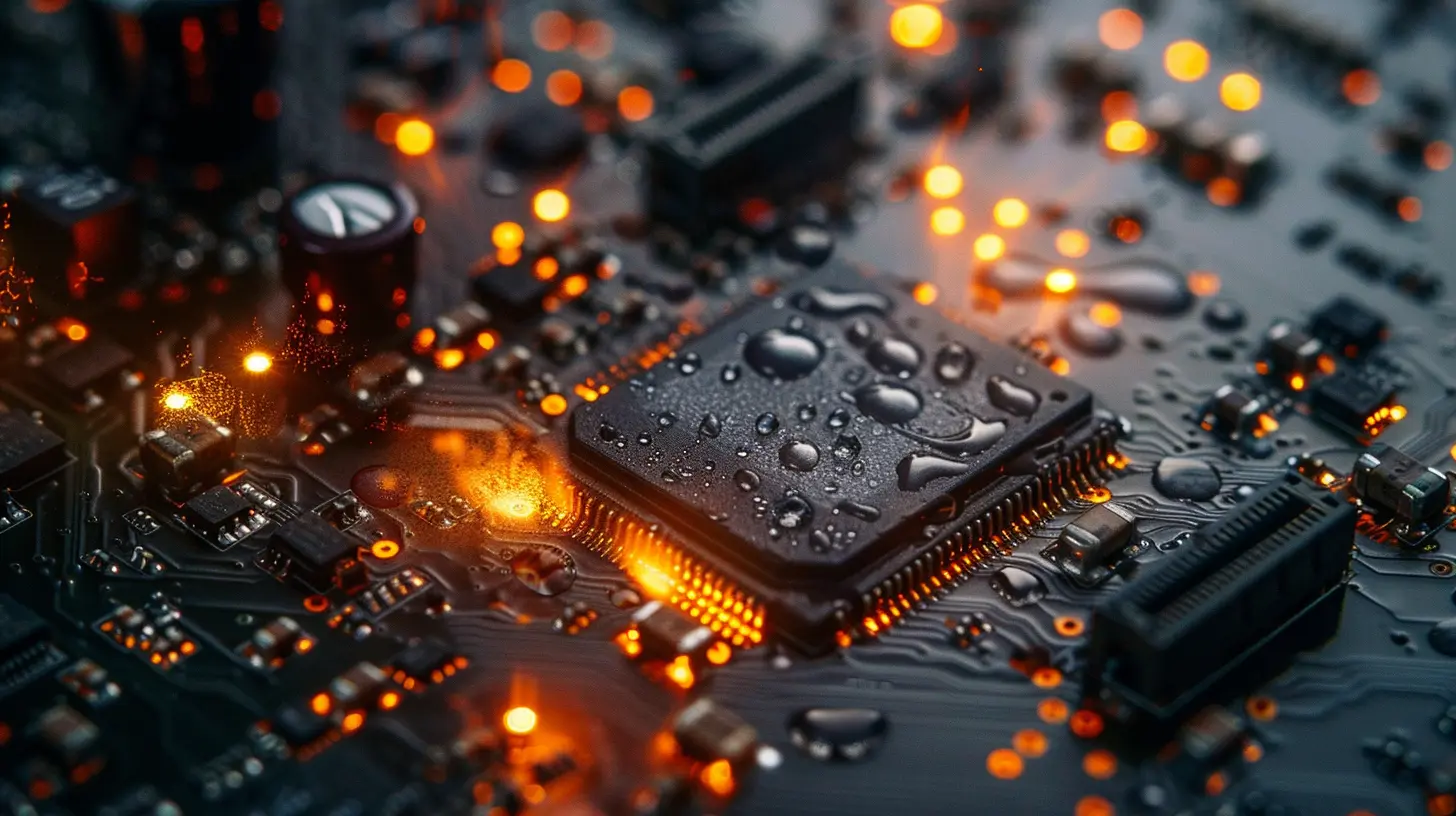

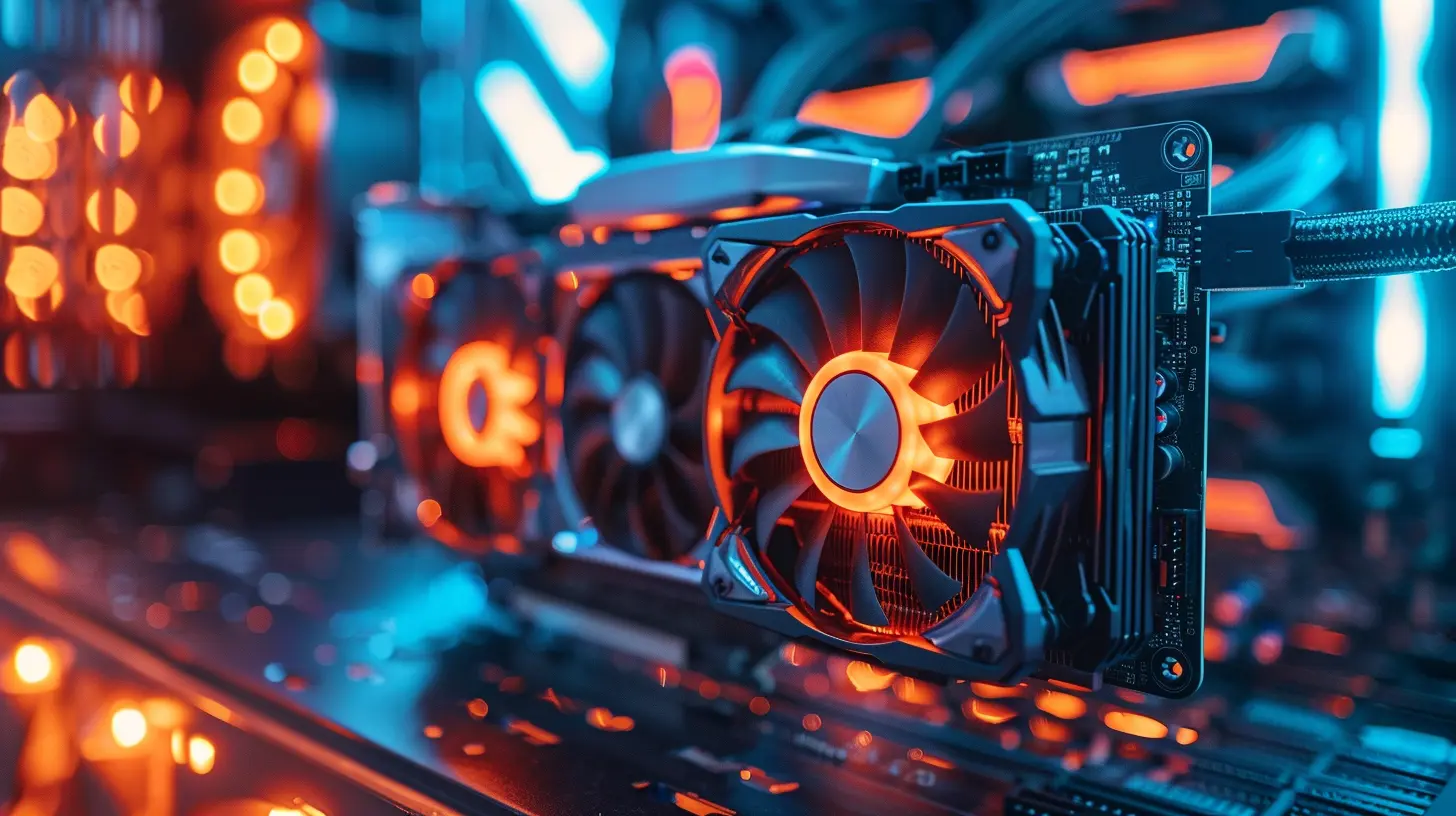

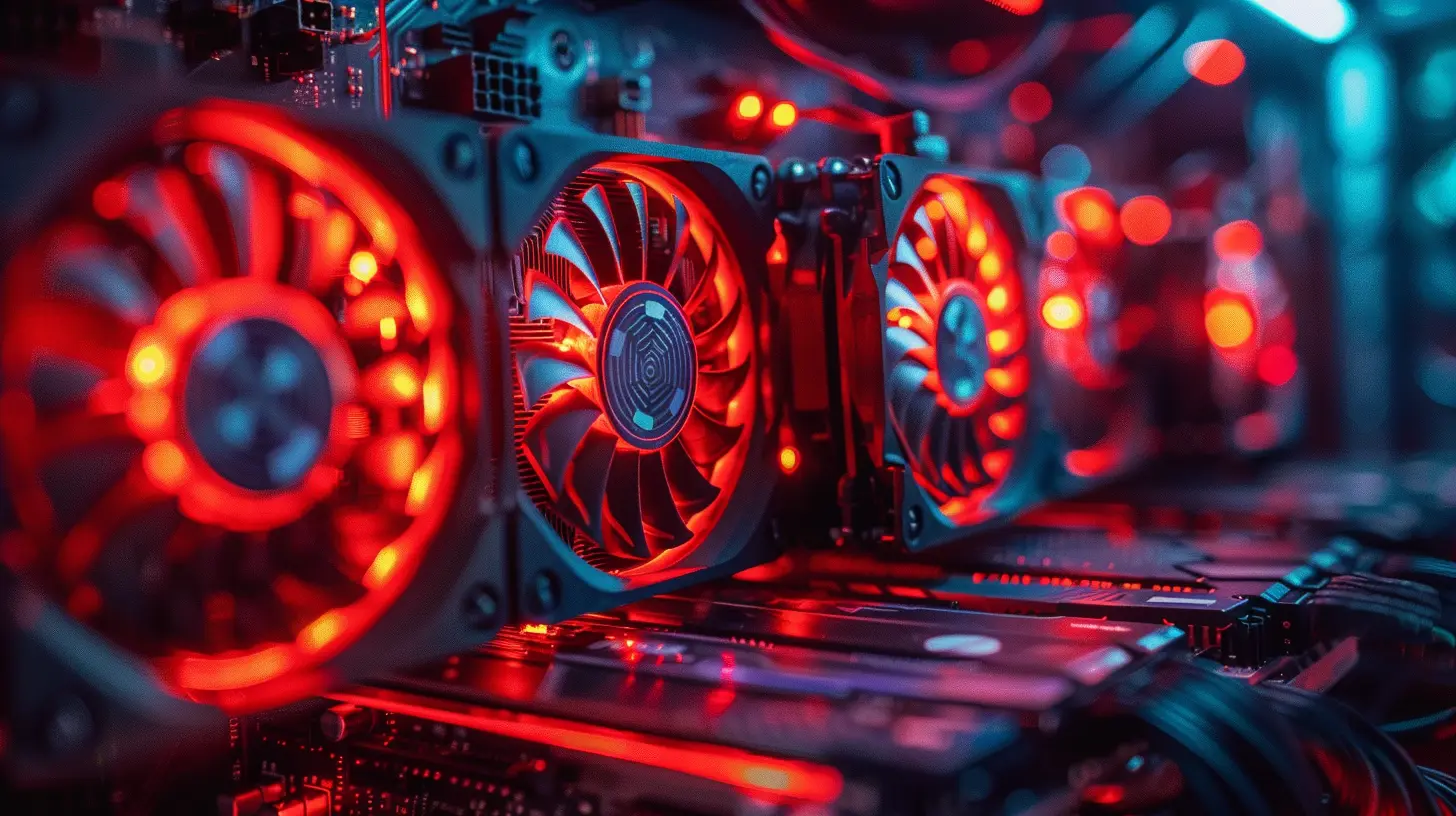

- Cooling Systems: High-end cards often feature dual or triple fans, liquid cooling, or even built-in heaters (just kidding… sort of). These systems require extra power to keep the whole rig from becoming a furnace.

- Lighting and RGB Bling: Not a massive factor, but yes, those pretty lights use power too.

To put it into perspective, a mid-range graphics card might draw around 120–200 watts. A high-end card like NVIDIA’s RTX 4090? That can spike over 450 watts, easily. Throw that into a system with a hungry CPU and peripherals, and you’re looking at a 700+ watt power pull under load. Yikes.

Idle vs Load: When Does the GPU Really Eat Power?

Just because you’ve got a power-hungry GPU doesn’t mean it's always maxing out.

- Idle Mode: When you're just browsing the web or watching YouTube, even the biggest GPUs usually draw just 15–30 watts.

- Under Load: Fire up Cyberpunk 2077 on ultra settings, and your GPU flexes its muscles, pulling close to its full rated power. For a high-end card, that means several hundred watts.

The thing is, many people keep their PCs running for hours on end while gaming, streaming, or rendering. These longer sessions at peak load? That’s when your electric bill starts to take a beating.

How Much Does a GPU Really Add to Your Energy Bill?

Let’s crunch a few numbers. Don’t worry, we’ll keep it simple.

Take an RTX 4080 drawing 320 watts under full load, used for 4 hours a day. Here's the math:

- 320 watts = 0.32 kilowatts

- 0.32 kW x 4 hours = 1.28 kWh/day

- Over a month (30 days): 1.28 x 30 = 38.4 kWh

- Average cost per kWh in the U.S. = $0.16 (your rate may vary)

- Monthly cost = 38.4 x 0.16 = ~$6.14/month
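If you'd rather plug in your own numbers, the same arithmetic fits in a few lines of Python. This is just a sketch: the function name `monthly_gpu_cost` and its parameters are made up for illustration, and it assumes the card sits at a constant wattage for the whole session, which real workloads rarely do.

```python
def monthly_gpu_cost(watts: float, hours_per_day: float,
                     price_per_kwh: float, days: int = 30) -> float:
    """Return the estimated electricity cost in dollars for one billing period."""
    kilowatts = watts / 1000                  # 320 W -> 0.32 kW
    kwh_per_day = kilowatts * hours_per_day   # energy used per day
    kwh_per_period = kwh_per_day * days       # energy used over the period
    return kwh_per_period * price_per_kwh


if __name__ == "__main__":
    # The RTX 4080 example from above: 320 W, 4 hours a day, $0.16 per kWh.
    cost = monthly_gpu_cost(watts=320, hours_per_day=4, price_per_kwh=0.16)
    print(f"Estimated monthly cost: ${cost:.2f}")  # prints $6.14
```

Swap in the rate from your own bill and your real hours to get a figure that actually applies to you.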

Now, that doesn’t sound like much, right? But consider this:

- Add power draw from CPU, motherboard, monitor.

- Factor in longer gaming/rendering sessions.

- Running a GPU farm for crypto mining? Multiply that by 10 or more.

Suddenly, it’s not peanuts anymore.

Why High-End GPUs Are Power Hogs

Here’s the truth: performance scales with power. Want ultra ray-traced graphics at 120 FPS? You’ll pay a power premium.

High-end GPUs often come with:

- More cores and transistors

- Higher clock speeds

- More VRAM

- Enhanced AI engines & ray tracing cores

All of that screams for more power. NVIDIA even provides power recommendations with their cards nowadays — some need 850W+ PSUs just to run safely.

It’s like stuffing a V12 engine in your hatchback. Sure, it flies, but it guzzles gas like it's free.

Energy Efficiency: It’s Not All Doom and Gloom

Thankfully, GPU makers aren’t just focused on raw power. They’re also improving efficiency.

Take NVIDIA’s Ada Lovelace architecture or AMD’s RDNA 3. These newer designs aim to deliver more performance per watt, meaning you can get better gaming or rendering without necessarily burning through more electricity.

In fact, modern mid-tier GPUs can outperform older flagship cards while using far less power. That’s a win in both performance and your energy bill.

Pro tip: Don’t just look at performance benchmarks. Look at performance per watt too (for example, average FPS divided by average watts drawn). That’s where the smart money goes.
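Here's a rough way to compare cards on performance per watt. Treat it as a sketch: the `Card` class is invented for this example, and the FPS and wattage figures are placeholders, not real benchmark results. Fill in numbers from a review or your own testing.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    avg_fps: float     # average frames per second in your benchmark
    avg_watts: float   # average board power during that same benchmark

    @property
    def fps_per_watt(self) -> float:
        return self.avg_fps / self.avg_watts


# Placeholder numbers for illustration only, not measured results.
cards = [
    Card("Card A (hypothetical flagship)", avg_fps=140, avg_watts=400),
    Card("Card B (hypothetical mid-tier)", avg_fps=100, avg_watts=200),
]

# Most efficient card first.
for card in sorted(cards, key=lambda c: c.fps_per_watt, reverse=True):
    print(f"{card.name}: {card.fps_per_watt:.2f} FPS per watt")
```

With these made-up figures, the slower card comes out ahead on efficiency, which is exactly the trade-off a raw FPS chart hides.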

How to Check Your GPU’s Power Usage (and Tame It)

Want to take control? Good. Here’s how you can check and reduce your GPU’s power footprint.

🔍 Monitoring Tools

You don’t need to guess. Use tools like:

- GPU-Z

- MSI Afterburner

- HWMonitor

- NVIDIA/AMD Software Suites

These let you monitor wattage, temperature, fan speeds, and more.
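If you prefer a script over a GUI, NVIDIA's driver ships a command-line tool called `nvidia-smi` that can report power draw. The sketch below assumes you have an NVIDIA card with `nvidia-smi` on your PATH; the helper names `parse_power_draw` and `read_gpu_power_watts` are made up for this example. AMD cards need different tooling, which isn't covered here.

```python
import subprocess


def parse_power_draw(output: str) -> list[float]:
    """Parse one wattage value per line, skipping GPUs with no reading."""
    readings = []
    for line in output.strip().splitlines():
        try:
            readings.append(float(line.strip()))
        except ValueError:
            # Some GPUs report "[N/A]" instead of a number.
            continue
    return readings


def read_gpu_power_watts() -> list[float]:
    """Ask nvidia-smi for the current power draw of each NVIDIA GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=power.draw",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_power_draw(result.stdout)


if __name__ == "__main__":
    try:
        print(read_gpu_power_watts())
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"Could not query nvidia-smi: {exc}")
```

Run it once at the desktop and once mid-game, and you'll see the idle-versus-load gap for your own card.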

💡 Tips to Reduce GPU Power Draw

1. Undervolt Your GPU: Sounds scary, but it’s safe if done right. With tools like MSI Afterburner, you can drop voltage while keeping performance almost intact.

2. Use Frame Rate Caps: Lock your games at 60 or 120 FPS instead of letting them run wild.

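3. Enable V-Sync: This caps the frame rate at your monitor’s refresh rate, so the GPU stops rendering frames the display can’t show. G-Sync or FreeSync alone doesn’t cap frames, so pair it with a frame limiter.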

4. Lower Graphics Settings: Do you really need ultra everything in every game?

5. Turn Off When Idle: Set your PC to sleep/hibernate after you're done. Don’t let it run for hours doing nothing.

The Hidden Cost: Heat and Cooling

Here’s a sneaky side effect people forget about: heat.

The more power your GPU uses, the more heat it generates. This means your cooling fans work harder, or your room gets warmer (and that air conditioning kicks in). Especially if you’re gaming or rendering in summer, this indirect energy usage adds up.

Your graphics card isn’t just drawing power into itself — it's causing a ripple effect across your entire cooling and energy situation. Kind of like tossing a rock into a pond and watching the waves spread.
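To get a feel for that ripple effect, here's a back-of-the-envelope sketch. It leans on two assumptions that aren't from this article: that essentially all the electricity your GPU draws ends up as heat in the room, and that your air conditioner removes heat with a coefficient of performance (COP) of about 3, meaning it moves roughly 3 units of heat per unit of electricity. The function name `cooling_overhead_kwh` is made up, and real AC units vary, so check your own unit's rating.

```python
def cooling_overhead_kwh(gpu_kwh: float, cop: float = 3.0) -> float:
    """Extra electricity an air conditioner needs to remove the GPU's heat.

    Assumes all GPU energy becomes room heat and the AC runs at a fixed COP.
    """
    if cop <= 0:
        raise ValueError("COP must be positive")
    return gpu_kwh / cop


gpu_kwh_month = 38.4  # monthly figure from the RTX 4080 example earlier
extra = cooling_overhead_kwh(gpu_kwh_month)
print(f"GPU: {gpu_kwh_month:.1f} kWh, extra cooling: {extra:.1f} kWh")
```

Under those assumptions, the cooling bill adds about a third on top of what the card itself uses, and only on days when the AC is actually running.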

Work or Play: Different Use Cases, Different Costs

Not everyone uses their GPU the same way. Let’s break it down real quick:

- Gamers: Likely to spike usage for 1–6 hours/day with variable loads.

- Content Creators: Video editing, 3D modeling, and rendering can keep the GPU busy for hours.

- Crypto Miners: Continuous 24/7 usage — massive power draw and bills.

- AI Developers/Researchers: Training and inference tasks can chew through power, especially with multiple GPUs.

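Your energy bill will reflect your habits. A casual gamer won’t feel the pinch as much as someone mining crypto around the clock. (Ethereum itself dropped GPU mining when it moved to proof of stake in 2022, but other coins still keep mining rigs busy.)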
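To see how far apart those habits land, here's a quick comparison sketch. It reuses the 320-watt card and $0.16 per kWh rate from the earlier example. The daily hours for the gamer and content creator are illustrative guesses (only the miner's 24/7 comes from the list above), and it ignores multi-GPU setups and the rest of the PC.

```python
GPU_WATTS = 320        # RTX 4080 under load, as in the earlier example
PRICE_PER_KWH = 0.16   # U.S. average rate used earlier; yours may differ
DAYS = 30

# Hours per day are illustrative guesses, except the miner's 24/7.
profiles = {
    "Gamer": 3,
    "Content creator": 6,
    "Crypto miner": 24,
}


def monthly_cost(hours_per_day: float) -> float:
    """Monthly cost in dollars for one GPU at full load for the given hours."""
    return GPU_WATTS / 1000 * hours_per_day * DAYS * PRICE_PER_KWH


for name, hours in profiles.items():
    print(f"{name:<16} {hours:>2} h/day  ${monthly_cost(hours):6.2f}/month")
```

Same card, same rate, and the round-the-clock user pays several times what the evening gamer does.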

Future-Proofing: What’s Next?

Energy efficiency is becoming a bigger selling point. Manufacturers are being pushed to develop GPUs that don’t demand a nuclear reactor to run.

Some innovations to keep an eye on:

- Smaller manufacturing processes (like 5nm, 3nm tech)

- Advanced AI-based power management

- Dynamic voltage scaling

- Eco-focused GPUs for budget use

Eventually, we might get to a place where high-end performance doesn't come at the cost of a sky-high energy bill. But for now? You’ve got to be smart about it.

So… Is Your GPU Secretly Making You Broke?

Not exactly, but it’s definitely sipping on your wallet behind the scenes. Think of it like a powerful roommate who eats all the snacks and never pitches in for the grocery bill.

It’s not a question of avoiding powerful GPUs, but of understanding their impact. By being aware of which card you use, how often you stress it, and what tweaks you can make, you can balance performance with energy efficiency.

Whether you're gaming, creating, or mining digital gold, that big metal slab sitting in your rig is more than just a tool — it’s an energy-hungry companion.

Know it. Tame it. And maybe stop wondering where that extra $20 on your energy bill came from.

💬 Final Thoughts

Graphics cards have come a long way, and power consumption is now one of the biggest considerations alongside performance. With energy rates rising and devices getting beefier, it's crucial to understand how your GPU fits into the big picture of your power usage.

The good news? You don’t have to sacrifice performance for efficiency. A few smart moves, some energy awareness, and you’ll strike that perfect balance between epic frame rates and sane utility bills.

Next time you hear your graphics card rev up, don’t just admire those beautiful graphics — think about the little watt gremlins running on your dime in the background.

All images in this post were generated using AI tools.

Category: Graphics Cards

Author: Pierre McCord

Discussion

1 comment

Izaak Cross

Great article! It's fascinating how graphics cards significantly impact power consumption and energy costs. Understanding GPU power ratings, efficiency, and workload demands can help users make informed decisions. Optimizing settings and choosing energy-efficient models can lead to substantial savings in both performance and bills.

August 21, 2025 at 4:53 AM

Pierre McCord

Thank you! I'm glad you found the article insightful. Understanding GPU efficiency is key to balancing performance with energy savings.